COVID-19 and Bayesian Point Scoring

3/26/20

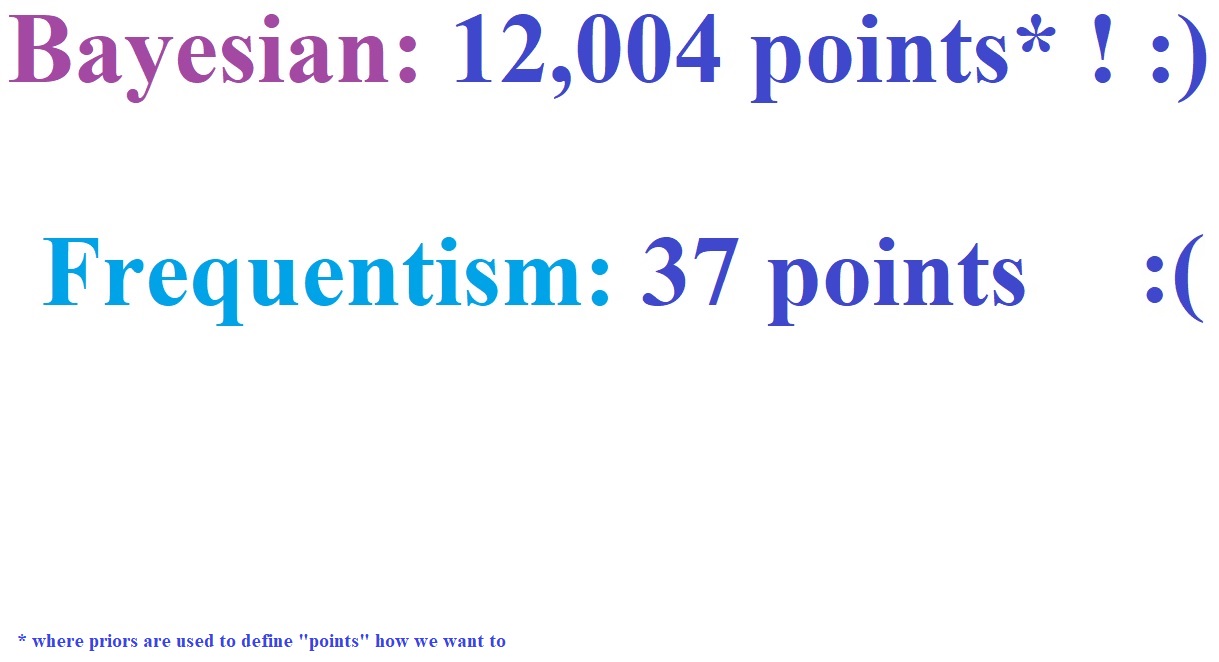

With something as serious as the COVID-19 situation, statisticians should realize this is not the proper time to engage in "Bayesian point scoring" or to write essays about the supposed dangers of frequentism. Case in point: I came across this webpage by Harrell and Lindsell on a Bayesian approach to analyzing the COVID-19 situation, Statistical Design and Analysis Plan for Randomized Trial of Hydroxychloroquine for Treatment of COVID-19: ORCHID. Their approach is interesting, but I don't understand their need to oversell arguments against frequentism and for Bayesian methods, and to downplay or omit arguments for frequentism and against Bayesian methods. Also see their video Bayesian Sequential Designs for COVID-19 Clinical Trials.

I've discussed Harrell's views on frequentism before in Plenary Session 2.06: Frequentist Response, Definition of Probability, and Objections to Frequentism. Harrell is a great biostatistician, and I find that he is never technically wrong, but he is definitely biased against frequentism since his conversion to Bayesianism.

In this article, text in bold blue is quoted from Harrell and Lindsell.

Continuous learning from data and computation of probabilities that are directly applicable to decision making in the face of uncertainty are hallmarks of the Bayesian approach.

Because their article compares Bayesian and frequentist approaches, I find it odd that it implies frequentists do not engage in "continuous learning" (when frequentists are not being lazy, oblivious stutterers, that is!). When an experiment is run, a model is fit, or a survey is conducted, one draws on information from the literature, from the experiment or survey itself, and from subject matter experts, and uses adaptive designs, sequential analysis, study limitations, and lessons learned to improve current or future experiments, to reject or extend models, and to drive continuous process improvement. Bayesians have not cornered the market on "continuous learning" as the authors imply.

I would also opine that the Bayesian "probabilities" mentioned are not probabilities. It can be argued that they are more accurately described as uncertainty, chance, belief, personal probability, or subjective probability. A more objective and standard definition of "probability" is the limiting relative frequency of a repeating process, which converges irrespective of place selection, as discussed by von Mises and backed up by Kolmogorov. It is not a quantity derived from possibly subjective, brittle priors and MCMC settings, carried through to a posterior distribution and integrated over arbitrary cutoffs, which can differ from person to person.
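The relative frequency definition can be illustrated with a short simulation. This is a minimal sketch, assuming a simulated fair coin (p = 0.5) driven by Python's pseudo-random generator; taking "every second flip" is just one hypothetical example of a place selection rule chosen without looking at the outcomes, which should converge to the same limit.

```python
import random


def simulate_flips(n_trials: int, p: float = 0.5, seed: int = 1) -> list[int]:
    """Return n_trials simulated Bernoulli(p) outcomes (1 = heads, 0 = tails)."""
    rng = random.Random(seed)
    return [1 if rng.random() < p else 0 for _ in range(n_trials)]


def relative_frequency(outcomes: list[int]) -> float:
    """Proportion of heads in a sequence of outcomes."""
    return sum(outcomes) / len(outcomes)


flips = simulate_flips(100_000)

# Relative frequency of heads over longer and longer initial segments.
for n in (10, 100, 1_000, 10_000, 100_000):
    print(f"first {n:>7} flips: {relative_frequency(flips[:n]):.4f}")

# A place selection made without looking at outcomes (every second flip)
# should settle on the same limiting value.
print(f"every 2nd flip:      {relative_frequency(flips[::2]):.4f}")
```

Early segments wander noticeably, while the long-run values settle near 0.5 for both the full sequence and the selected subsequence.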

Classical null hypothesis testing only provides evidence against the supposition that a treatment has exactly zero effect, and it requires one to deal with complexities if not doing the analysis at a single fixed time.

I find the wording "only" strange. A frequentist analysis, which is more than just null hypothesis significance testing, would not only run significance tests but also discuss the science and the experimental design, show graphs, fit models, check assumptions, report point estimates, predictions, and confidence intervals, perform equivalence tests, and so on.

Imagine that the military has developed a pattern recognition algorithm to determine whether a distant object is a tank. Initially an image results in an 0.8 probability of the object being a tank. The object moves closer and some fog clears. The probability is now 0.9, and the 0.8 has become completely irrelevant.

Contrast with a frequentist way of thinking: Of all the times a tested object wasn't a tank, the probability of acquiring tank-like image characteristics at some point grows with the number of images acquired. The frequency of image acquisition (data looks) and the image sampling design alter the probability of finding a tank-like image, but that way of looking at the problem is divorced from the real-time decision to be made based on current cumulative data. An earlier tank image is of no interest once a later, clearer image has been acquired.

Nothing in this thought experiment is a problem for frequentism. In fact, pattern recognition and machine learning are used quite often, military or otherwise, with or without Bayesian methods. The standard Bayes rule is very much in the frequentist domain when it involves general events rather than probability distributions on parameters. Because we are using hypotheticals, consider a hypothetical .10 "probability" of the object being a tank; when the fog clears, the "probability" is 1 and you have been blown up by the tank. Bad prior? I would even argue that the statement "the 0.8 has become completely irrelevant" is wrong, because the 0.8 can be used in an updating process to get the 0.9.
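To make the updating point concrete, here is a minimal sketch of Bayes' rule for events in odds form. It assumes, hypothetically, that the 0.9 arises by sequentially updating the 0.8 with a likelihood ratio for the clearer image; the quoted passage does not say how the algorithm actually works, and the likelihood ratio below is simply the one implied by going from 0.8 to 0.9.

```python
def update(prior_prob: float, likelihood_ratio: float) -> float:
    """Bayes' rule in odds form: posterior odds = prior odds * likelihood ratio.

    likelihood_ratio = P(new image | tank) / P(new image | not a tank).
    """
    prior_odds = prior_prob / (1 - prior_prob)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)


def implied_likelihood_ratio(prior_prob: float, posterior_prob: float) -> float:
    """Likelihood ratio needed to move prior_prob to posterior_prob."""
    posterior_odds = posterior_prob / (1 - posterior_prob)
    prior_odds = prior_prob / (1 - prior_prob)
    return posterior_odds / prior_odds


# Hypothetical: how strong must the clearer image be to move 0.8 to 0.9?
lr = implied_likelihood_ratio(0.8, 0.9)
print(f"implied likelihood ratio: {lr:.2f}")
print(f"0.8 updated with that ratio: {update(0.8, lr):.2f}")
```

The 0.8 (odds of 4) is the input that, combined with a likelihood ratio of 2.25, yields 0.9 (odds of 9). Nothing here requires a prior distribution on a parameter; it is ordinary probability calculus on events.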

The traditional approach limits the number of data looks and has a higher chance of waiting longer than needed to declare evidence sufficient. It also is likely to declare futility too late.

But all these scary-sounding hypotheticals can be turned around. For example, Bayesian approaches don't properly adjust for multiplicities, are not conservative, and allow for more p-hacking (priors, hyperparameters, MCMC settings) and double-dipping of the data (prior and posterior checks). When they declare usefulness, they are likely to do so based on an unreliable small sample and possibly subjective, brittle priors, and with unwarranted confidence.
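The multiplicity issue behind limiting the number of data looks (and behind the "tank-like image at some point" argument) can be quantified with a small simulation. This is a minimal sketch under stated assumptions: data are simulated with no true effect (normal, mean 0, known SD 1), the cumulative data are z-tested at each look with an unadjusted two-sided alpha of 0.05, and the looks, batch size, and number of simulations are hypothetical choices. Group sequential designs exist precisely to control the inflation this shows.

```python
import random
from statistics import NormalDist


def ever_rejects(n_per_look: int, n_looks: int, alpha: float,
                 rng: random.Random) -> bool:
    """Simulate one trial under the null (mean 0, SD 1) and run an unadjusted
    two-sided z-test on the cumulative data at each look.
    Return True if any look rejects the null."""
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    total, n = 0.0, 0
    for _ in range(n_looks):
        for _ in range(n_per_look):
            total += rng.gauss(0.0, 1.0)
            n += 1
        z = total / n ** 0.5  # sample mean / (1 / sqrt(n)) with known SD 1
        if abs(z) > z_crit:
            return True
    return False


def false_positive_rate(n_looks: int, alpha: float = 0.05, n_per_look: int = 20,
                        n_sims: int = 4000, seed: int = 2020) -> float:
    """Estimate P(at least one rejection | null true) over n_sims trials."""
    rng = random.Random(seed)
    hits = sum(ever_rejects(n_per_look, n_looks, alpha, rng) for _ in range(n_sims))
    return hits / n_sims


for looks in (1, 2, 5, 10):
    rate = false_positive_rate(looks)
    print(f"{looks:>2} unadjusted looks: P(ever reject | null) ~ {rate:.3f}")
```

With one look the rate stays near the nominal 0.05, and it grows as more unadjusted looks are taken. Frequentist sequential methods account for this explicitly rather than ignoring it.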

Since the prior distribution's influence fades as the sample size grows, the amount of discounting of the treatment effect lessens.

As the statistician Edwards said:

"It is sometimes said, in defence of the Bayesian concept, that the choice of prior distribution is unimportant in practice, because it hardly influences the posterior distribution at all when there are moderate amounts of data. The less said about this 'defence' the better."
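How quickly a prior "fades" depends on how strong it is, which can be checked with the conjugate normal-normal model. This is a minimal sketch with hypothetical numbers: an observed mean effect of 0.5, a known data SD of 1, and two priors centered at 0, one skeptical (SD 0.1) and one vague (SD 10). It is not the ORCHID model, only an illustration of the precision-weighted averaging that drives the claim.

```python
def posterior_mean(prior_mean: float, prior_sd: float,
                   sample_mean: float, data_sd: float, n: int) -> float:
    """Posterior mean of a normal mean under a normal prior with known data SD
    (conjugate normal-normal model): a precision-weighted average of the
    prior mean and the sample mean."""
    prior_precision = 1 / prior_sd ** 2
    data_precision = n / data_sd ** 2
    return ((prior_precision * prior_mean + data_precision * sample_mean)
            / (prior_precision + data_precision))


observed_effect = 0.5  # hypothetical sample mean, held fixed for illustration
data_sd = 1.0          # hypothetical known SD of individual observations
priors = {
    "skeptical (mean 0, SD 0.1)": (0.0, 0.1),
    "vague (mean 0, SD 10)": (0.0, 10.0),
}

for n in (10, 100, 1000):
    row = ", ".join(
        f"{name}: {posterior_mean(m, s, observed_effect, data_sd, n):.3f}"
        for name, (m, s) in priors.items()
    )
    print(f"n = {n:>4} -> {row}")
```

Under these assumptions, the skeptical prior still pulls the posterior mean to half the observed effect (0.25 versus 0.5) at n = 100, which is the kind of "moderate amount of data" Edwards has in mind.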

While it is possible to pre-specify decision triggers such as "we will act as if the drug is effective if the probability of efficacy exceeds 0.95," but there are always trade-offs against side-effect profiles and other considerations that are yet unknown, so FDA and other entities make decisions on the basis of totality of the evidence.

Yes, exactly, but the same applies to frequentism. I find that a lot of bad critiques of frequentism act as if frequentists make decisions based solely on a single statistic (e.g., a p-value), which is not the case. Decisions are, or should be, based on the totality of the evidence: scientific, medical, cost, and so on.

A recently published COVID-19 clinical trial (and discussed here) that has been widely misinterpreted as demonstrating no benefit of a drug just because the p-value did not meet an arbitrary threshold thus committing the "absence of evidence is not evidence of absence" error (in spite of the confidence interval being too wide to warrant a conclusion of no benefit).

I have not seen this paper being "widely misinterpreted" at all. In fact, the study has been praised for running a well-designed randomized controlled trial under epidemic conditions. Also, "just because the p-value did not meet an arbitrary threshold" is not correct: the authors of that study did not base their conclusion solely on a single p-value. Furthermore, "absence of evidence is not evidence of absence" is a good logical fallacy to be aware of, but it does not apply here. Suppose I run a well-designed experiment in which I flip a coin 100 times, repeat this 5 times, and get 50, 48, 52, 53, and 47 heads; that is evidence the coin is fair. The results of the experiments are the evidence that informs the hypothesis test, so there is no absence-of-evidence fallacy going on here. There is evidence from their trial, and we can of course debate its quality. The researchers stated clearly that more study is needed. Abandoning treatments that work can be bad, but so can embracing treatments that don't work.
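One standard frequentist way to turn the coin flips into positive evidence of fairness is to pool the runs, report a confidence interval, and run an equivalence test. This is a minimal sketch, not the only valid analysis: it assumes the five runs can be pooled as 500 independent flips, uses a normal-approximation (Wald) interval, and uses two one-sided tests (TOST) with a hypothetical equivalence margin of 0.05 around 0.5.

```python
from statistics import NormalDist

heads = [50, 48, 52, 53, 47]  # heads in each of the five 100-flip runs
flips_per_run = 100

n = flips_per_run * len(heads)
p_hat = sum(heads) / n                      # pooled proportion of heads
se = (p_hat * (1 - p_hat) / n) ** 0.5       # Wald standard error
z95 = NormalDist().inv_cdf(0.975)
ci95 = (p_hat - z95 * se, p_hat + z95 * se)

# TOST: reject both "p <= 0.5 - margin" and "p >= 0.5 + margin"
# to conclude the coin is fair within the (hypothetical) margin.
margin = 0.05
z_lower = (p_hat - (0.5 - margin)) / se
z_upper = ((0.5 + margin) - p_hat) / se
p_tost = max(1 - NormalDist().cdf(z_lower), 1 - NormalDist().cdf(z_upper))

print(f"pooled p_hat = {p_hat:.3f}, 95% CI = ({ci95[0]:.3f}, {ci95[1]:.3f})")
print(f"TOST p-value for equivalence within +/-{margin}: {p_tost:.4f}")
```

A non-significant test with a wide interval would not support "no effect"; here the interval is narrow and the equivalence test rejects, so the data are affirmative evidence of (practical) fairness rather than an absence of evidence.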

Prior Distributions

As recommended by our current research, intercepts will be given essentially nonparametric distributions using the Dirichlet distribution. The treatment effect, appearing as a log odds ratio in the PO model, will have a normal distribution such that

Prior P(OR>1)=P(OR<1)=0.5 (equally likely harmful as beneficial)

Prior P(OR>2)=P(OR<1/2)=0.1 (large effects in either direction unlikely)

The variance of the prior normal distribution for log OR is computed to satisfy the above tail areas.

For the adjustment covariates, the prior distributions will be normal with mean 0 and much larger variances to reflect greater uncertainty in their potential effects.
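The "variance computed to satisfy the above tail areas" step is a one-line calculation. This is a minimal sketch, assuming (as the equal tail statement P(OR>1)=P(OR<1)=0.5 implies) a normal prior on log OR with mean 0; the plan's own software may compute it differently. Since P(log OR > log 2) = 0.1, the SD is log(2) divided by the 90th percentile of the standard normal.

```python
import math
from statistics import NormalDist


def prior_sd_for_tail(or_threshold: float, tail_prob: float) -> float:
    """SD of a mean-zero normal prior on log(OR) such that
    P(OR > or_threshold) = P(OR < 1/or_threshold) = tail_prob."""
    z = NormalDist().inv_cdf(1 - tail_prob)  # standard normal quantile
    return math.log(or_threshold) / z


sd = prior_sd_for_tail(2.0, 0.10)
prior = NormalDist(0.0, sd)

print(f"prior SD of log OR = {sd:.4f}, variance = {sd ** 2:.4f}")
# Check that both tail areas come out as specified.
print(f"P(OR > 2)   = {1 - prior.cdf(math.log(2.0)):.3f}")
print(f"P(OR < 1/2) = {prior.cdf(math.log(0.5)):.3f}")
```

Because the prior is symmetric on the log scale, matching one tail automatically matches the other; the SD comes out at roughly 0.54 (variance roughly 0.29).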

There will need to be an extensive sensitivity analysis reported, of course.

There were numerous other misunderstandings about frequentism on their webpage, but I lost interest after a while.

Another example I came across is Corona and the Statistics Wars at Bayesian Spectacles. They write: "As the corona-crisis engulfs the world, politicians left and right are accused of 'politicizing' the pandemic. In order to follow suit I will try to weaponize the pandemic to argue in favor of Bayesian inference over frequentist inference." It doesn't dawn on the author that scientists often successfully study epidemics using frequentist or other non-Bayesian methods. The same old fallacies are also raised: that frequentists don't do prediction, don't learn from data, don't have sequential methods, and only care about p-values.

For a more balanced discussion of frequentism, likelihood, Bayesian, inference, philosophy, science, etc., I strongly recommend checking out Mayo's book Statistical Inference as Severe Testing: How to Get Beyond the Statistics Wars.

Thanks for reading.
